
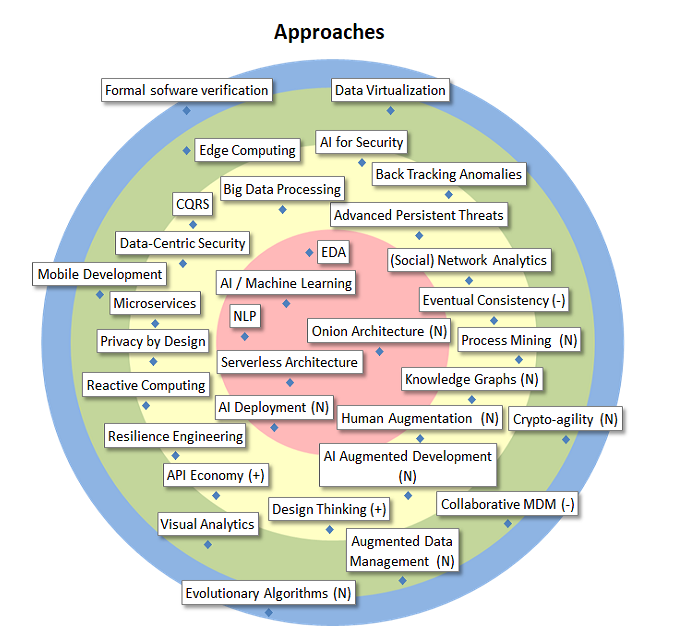

AI / Machine Learning

AI is the broader concept of machines acting in a way that we would consider “smart”. Machine learning is a form of AI based on giving machines access to data and letting them learn for themselves. It includes neural networks, deep learning, and language processing. A possible application is fraud detection.


AI Deployment

When machine learning is done ‘by hand’, a lot of time is lost between training a model, putting it into production, and then waiting for feedback that may lead to retraining. CD4ML (Continuous Delivery for Machine Learning) aims to automate this cycle.


EDA

An Event-Driven Architecture can offer many advantages over more traditional, synchronous approaches. Events and asynchronous communication can make a system much more responsive and efficient. Moreover, the event model often resembles the actual incoming business data more closely.
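A minimal, in-process sketch of the publish/subscribe style behind an EDA, written in Python with `asyncio`. The `EventBus` class, the `OrderPlaced` event, and both handlers are hypothetical; a real deployment would typically put a message broker between producers and consumers. The producer only enqueues an event and never calls the consumers directly, so billing and shipping stay decoupled from order intake.

```python
import asyncio
from collections import defaultdict
from typing import Awaitable, Callable, Dict, List

Handler = Callable[[dict], Awaitable[None]]


class EventBus:
    """Hypothetical in-memory event bus: producers publish, subscribers react."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)
        self._queue: asyncio.Queue = asyncio.Queue()

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    async def publish(self, event_type: str, payload: dict) -> None:
        # The producer only enqueues the event; it does not know or wait for consumers.
        await self._queue.put((event_type, payload))

    async def dispatch_pending(self) -> None:
        # Deliver each queued event to every handler subscribed to its type, concurrently.
        while not self._queue.empty():
            event_type, payload = await self._queue.get()
            await asyncio.gather(*(h(payload) for h in self._subscribers[event_type]))


async def main() -> List[str]:
    bus = EventBus()
    log: List[str] = []

    async def create_invoice(event: dict) -> None:
        log.append(f"invoice for order {event['order_id']}")

    async def schedule_shipment(event: dict) -> None:
        log.append(f"shipment for order {event['order_id']}")

    bus.subscribe("OrderPlaced", create_invoice)
    bus.subscribe("OrderPlaced", schedule_shipment)
    await bus.publish("OrderPlaced", {"order_id": 42})
    await bus.dispatch_pending()
    return log


print(asyncio.run(main()))
```

Adding a new consumer (for example, a loyalty-points service) only requires another `subscribe` call; the code that publishes `OrderPlaced` does not change.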


NLP

Natural Language Processing, a subfield of AI, includes techniques to distill information from unstructured text, with the aim of using that information in analytics algorithms. It is used for text mining, sentiment analysis, named entity recognition (NER), natural language generation (NLG), and more.


Onion Architecture

Closely related to ‘Hexagonal Architecture’: a set of architectural principles that make the domain model code central to everything and dependent on no other code or framework. Other parts of the program code may depend on the domain code, but never the other way around. It has recently gained a lot of popularity in the community.
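A sketch of the dependency rule, assuming a hypothetical banking domain (`Account`, `AccountRepository`, `withdraw_cash` and `InMemoryAccountRepository` are illustrative names). The domain core defines the interface it needs (a ‘port’, here a `typing.Protocol`), and the outer layer supplies an adapter that implements it, so dependencies always point inwards.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


# Domain core: imports nothing from the outer layers.
@dataclass
class Account:
    owner: str
    balance: int  # in cents, to avoid floating-point rounding

    def withdraw(self, amount: int) -> None:
        # The business rule lives in the domain model, not in the database layer.
        if amount <= 0 or amount > self.balance:
            raise ValueError("invalid withdrawal")
        self.balance -= amount


class AccountRepository(Protocol):
    """Port: an interface owned by the domain and implemented by outer layers."""

    def load(self, owner: str) -> Account: ...
    def save(self, account: Account) -> None: ...


# Application service: uses only domain types and the port.
def withdraw_cash(repo: AccountRepository, owner: str, amount: int) -> int:
    account = repo.load(owner)
    account.withdraw(amount)
    repo.save(account)
    return account.balance


# Outer layer (adapter): depends on the domain, never the reverse.
class InMemoryAccountRepository:
    def __init__(self) -> None:
        self._rows: Dict[str, int] = {}

    def load(self, owner: str) -> Account:
        return Account(owner, self._rows[owner])

    def save(self, account: Account) -> None:
        self._rows[account.owner] = account.balance


repo = InMemoryAccountRepository()
repo.save(Account("alice", 10_000))
print(withdraw_cash(repo, "alice", 2_500))
```

Swapping the in-memory adapter for a database-backed one would not require any change to `Account` or `withdraw_cash`.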


Serverless Architecture

This architecture lets developers simply deploy code, written against handler templates, to a ‘Function Platform as a Service’ (FPaaS). The code can then run immediately, without any server or middleware setup. Scalability, robustness, and many other non-functional requirements are handled by the platform.
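The exact handler template differs per platform. The sketch below assumes the `handler(event, context)` signature used by AWS Lambda’s Python runtime; the event field (`queryStringParameters`) and the response shape (`statusCode`, `headers`, `body`) are assumptions modeled on an HTTP-triggered function, not a requirement of FPaaS in general.

```python
import json


def handler(event: dict, context: object) -> dict:
    """Function invoked by the platform once per request or event.

    No server, routing, or scaling code appears here: the platform decides
    when, where, and how many instances of this function to run.
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation with a hand-made event; on the platform, the runtime
# builds the event and context objects itself.
if __name__ == "__main__":
    print(handler({"queryStringParameters": {"name": "FPaaS"}}, None))
```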


(Social) Network Analytics

Social network analytics (SNA) is the process of investigating social structures (i.e., relations between people and other entities such as companies, addresses, …) using network and graph theory, as well as concepts from sociology.
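A small sketch of two basic SNA measures on a hypothetical network of people, companies and addresses: degree centrality (how connected an entity is) and shortest-path length (how closely two entities are linked). The entity names are invented; graph libraries such as NetworkX offer these measures ready-made.

```python
from collections import defaultdict, deque

# Hypothetical undirected relations between people, companies and addresses.
edges = [
    ("Ann", "Acme Ltd"), ("Bob", "Acme Ltd"), ("Bob", "Main St 1"),
    ("Carl", "Main St 1"), ("Dana", "Main St 1"), ("Carl", "Beta Corp"),
]


def build_graph(edge_list):
    graph = defaultdict(set)
    for a, b in edge_list:
        graph[a].add(b)
        graph[b].add(a)
    return graph


def degree_centrality(graph):
    # Normalized degree: share of all other nodes a node is directly linked to.
    n = len(graph)
    return {node: len(neighbours) / (n - 1) for node, neighbours in graph.items()}


def shortest_path_length(graph, start, goal):
    """Breadth-first search: number of hops between two entities, or None if unlinked."""
    distance = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return distance[node]
        for neighbour in graph.get(node, ()):
            if neighbour not in distance:
                distance[neighbour] = distance[node] + 1
                queue.append(neighbour)
    return None


graph = build_graph(edges)
centrality = degree_centrality(graph)
print(max(centrality, key=centrality.get))         # the most connected entity
print(shortest_path_length(graph, "Ann", "Dana"))  # hops between Ann and Dana
```

In a fraud context, a shared address with unusually high centrality, or short paths between supposedly unrelated parties, are the kind of patterns an analyst would inspect further.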


Advanced Persistent Threats |

A relatively new type of threat in which attackers carry out a long-term computer attack against a well-defined target. The techniques closely resemble those used in industrial espionage.


AI Augmented Development |

Use of AI and NLP in the development environment: debugging, testing (mutation, fuzzing), generation of code/documentation, recommendations for refactoring, … |


AI for Security |

Non-traditional, AI-based methods that improve the security analysis of systems and applications, such as user behaviour analytics.


API Economy |

APIs, which connect services within and across multiple systems or even to third parties, are becoming prevalent and are driving a new business model centered on the integration of readily available data and services. They also promote loose coupling between components.


Back Tracking Anomalies |

A method to detect the causes of data quality problems in data flows between information systems and to remedy them structurally. The return on investment (ROI) is important and facilitates a win-win approach between institutions. To monitor the anomalies and transactions, an extension to the existing DBMS has to be built.


Big Data Processing |

Big data analytics solutions require an architecture that 1) executes calculations where the data is stored, 2) spreads data and calculations over several nodes, and 3) uses a data warehouse architecture that transparently makes all types of data available to analytical tools.
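A toy illustration of points 1) and 2), assuming three data partitions that stand for three cluster nodes and a thread pool that stands in for the cluster. Each ‘node’ aggregates its own records locally and only the small partial counts are merged, which is the idea behind map-reduce style engines; nothing here reflects the API of a specific product.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce
from typing import List

# Hypothetical records, already spread over three "nodes" (partitions).
partitions = [
    ["fraud", "claim", "claim"],
    ["claim", "refund"],
    ["fraud", "refund", "refund"],
]


def local_aggregate(records: List[str]) -> Counter:
    # Runs next to the data: only this small summary leaves the node.
    return Counter(records)


def merge(a: Counter, b: Counter) -> Counter:
    # Combining partial results is cheap compared to moving the raw records.
    return a + b


with ThreadPoolExecutor(max_workers=len(partitions)) as pool:
    partial_results = list(pool.map(local_aggregate, partitions))

totals = reduce(merge, partial_results, Counter())
print(totals)
```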


CQRS |

Command Query Responsibility Segregation: keep the parts of a system that handle queries separate from the parts that handle state changes. Because the read and write sides can then be modeled, stored, and scaled independently, this allows for more flexible and scalable distributed architectures.
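A minimal sketch, assuming a hypothetical order domain: the command side validates and records state changes, and the query side keeps its own denormalized read model. Linking the two through a list of events is one common option (often combined with event sourcing), but CQRS itself does not require it.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Event = Tuple[str, str, int]  # (event type, order id, amount)


@dataclass
class OrderCommands:
    """Write side: validates and applies state changes; answers no queries."""
    events: List[Event] = field(default_factory=list)

    def place_order(self, order_id: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.events.append(("OrderPlaced", order_id, amount))

    def cancel_order(self, order_id: str) -> None:
        self.events.append(("OrderCancelled", order_id, 0))


class OrderSummaryQueries:
    """Read side: a view shaped for fast queries, rebuilt from the write side's events."""

    def __init__(self) -> None:
        self.open_orders: Dict[str, int] = {}

    def rebuild(self, events: List[Event]) -> None:
        self.open_orders.clear()
        for kind, order_id, amount in events:
            if kind == "OrderPlaced":
                self.open_orders[order_id] = amount
            elif kind == "OrderCancelled":
                self.open_orders.pop(order_id, None)

    def total_open_amount(self) -> int:
        return sum(self.open_orders.values())


commands = OrderCommands()
commands.place_order("A1", 100)
commands.place_order("A2", 250)
commands.cancel_order("A1")

queries = OrderSummaryQueries()
queries.rebuild(commands.events)
print(queries.total_open_amount())
```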


Data-Centric Security |

An approach that protects sensitive data in a single, centralized way, regardless of its format or location (e.g. using data anonymization or tokenization technologies in conjunction with centralized policies and governance).
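A sketch of tokenization with a hypothetical central `TokenVault`: the sensitive field is replaced by a random token wherever the record travels, and a single, central policy decides who may reverse it. The role name `fraud-investigator` and the record fields are illustrative assumptions; a production vault would also need persistent, access-controlled storage.

```python
import secrets
from typing import Dict


class TokenVault:
    """Hypothetical central vault that swaps sensitive values for random tokens."""

    AUTHORIZED_ROLES = {"fraud-investigator"}  # central policy, assumed for illustration

    def __init__(self) -> None:
        self._token_to_value: Dict[str, str] = {}
        self._value_to_token: Dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # The same value always yields the same token, so joins on tokens still work.
        if value not in self._value_to_token:
            token = "tok_" + secrets.token_hex(8)
            self._value_to_token[value] = token
            self._token_to_value[token] = value
        return self._value_to_token[value]

    def detokenize(self, token: str, caller_role: str) -> str:
        # Only the vault, applying the central policy, can reveal the original value.
        if caller_role not in self.AUTHORIZED_ROLES:
            raise PermissionError(f"role {caller_role!r} may not detokenize")
        return self._token_to_value[token]


vault = TokenVault()
record = {"name": "Example Person", "national_id": "ID-0001", "amount": 120}
safe_record = {**record, "national_id": vault.tokenize(record["national_id"])}
print(safe_record)
```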


Design Thinking |

Used to improve design, from software to products and services. Empathy for users and gathering insight into their needs and motivations are crucial. Used for people-focused digital innovation. Closely related to Human-Centered Design and to the uptake of (government) applications.


Eventual Consistency |

A general way to evolve systems away from overly restrictive ACID principles: replicas of the data may temporarily disagree, but they converge to the same state once no new updates arrive. Applying this principle, and pushing it through at the business level, is the only way to keep systems evolving so that they become more distributed, scalable, flexible, and maintainable.
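One well-known way to get eventual consistency without coordination is a conflict-free replicated data type (CRDT); this technique is not named in the text above and is used here only as an illustration. The sketch shows a grow-only counter (G-Counter) on two hypothetical replicas that accept writes independently and converge once they exchange state.

```python
from typing import Dict


class GCounter:
    """Grow-only counter CRDT: each replica increments only its own slot."""

    def __init__(self, replica_id: str) -> None:
        self.replica_id = replica_id
        self.counts: Dict[str, int] = {}

    def increment(self, n: int = 1) -> None:
        self.counts[self.replica_id] = self.counts.get(self.replica_id, 0) + n

    def value(self) -> int:
        return sum(self.counts.values())

    def merge(self, other: "GCounter") -> None:
        # Element-wise max is commutative, associative and idempotent,
        # so replicas converge regardless of message order or duplication.
        for rid, count in other.counts.items():
            self.counts[rid] = max(self.counts.get(rid, 0), count)


a, b = GCounter("a"), GCounter("b")
a.increment(3)  # accepted locally, without coordinating with b
b.increment(2)
print(a.value(), b.value())  # replicas temporarily disagree: 3 2
a.merge(b)
b.merge(a)
print(a.value(), b.value())  # after exchanging state they agree: 5 5
```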


Human Augmentation |

Enhancement of human capabilities using technology and science. It can be very futuristic (e.g. brain implants), but smart glasses are a realistic example of physical augmentation. Cognitive augmentation (improving a human’s ability to think and make better decisions) will be made possible by AI.


Knowledge Graphs |

Knowledge graphs semantically enhance information retrieval systems (e.g. search engines). They are now also used together with NLP to improve conversational agents and Q&A systems. Conversely, NLP techniques such as named entity recognition (NER) are used to populate or build knowledge graphs.
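Knowledge graphs are commonly stored as (subject, predicate, object) triples, the model used by RDF. The sketch below uses a handful of plain Python tuples and a wildcard matcher instead of a real triple store or SPARQL engine; the `match`, `answer` and `cities_in_eu` helpers are hypothetical. The last query follows two edges, which is the kind of semantic hop a keyword search cannot make.

```python
from typing import List, Optional, Tuple

Triple = Tuple[str, str, str]

# A tiny knowledge graph of (subject, predicate, object) facts.
triples = {
    ("Brussels", "capitalOf", "Belgium"),
    ("Brussels", "locatedIn", "Belgium"),
    ("Antwerp", "locatedIn", "Belgium"),
    ("Belgium", "memberOf", "European Union"),
}


def match(s: Optional[str] = None, p: Optional[str] = None,
          o: Optional[str] = None) -> List[Triple]:
    """Return all triples matching the pattern; None acts as a wildcard."""
    return sorted(t for t in triples
                  if (s is None or t[0] == s)
                  and (p is None or t[1] == p)
                  and (o is None or t[2] == o))


def answer(subject: str, relation: str) -> List[str]:
    # A Q&A front end might map "What is Brussels the capital of?" to (Brussels, capitalOf, ?).
    return [obj for _, _, obj in match(subject, relation)]


def cities_in_eu() -> List[str]:
    # Two-hop query: city -locatedIn-> country -memberOf-> European Union.
    return [city for city, _, country in match(p="locatedIn")
            if match(country, "memberOf", "European Union")]


print(answer("Brussels", "capitalOf"))
print(cities_in_eu())
```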


Microservices |

Independently maintainable and deployable services, kept very small (hence ‘micro-’), make an application, or even a large group of related systems, much more flexibly scalable. They also provide functional agility, which allows a system to rapidly support new business opportunities.


Privacy by Design |

Privacy by design calls for privacy to be taken into account throughout the whole engineering process. The European General Data Protection Regulation (GDPR) incorporates privacy by design. An example of an existing methodology is LINDDUN, a privacy threat modeling framework.


Process Mining |

Includes automated process discovery (extracting process models from an information system’s event log) and also offers ways to monitor, check, and improve processes. Often used to prepare Robotic Process Automation (RPA) and other business process initiatives in the context of digital transformation.
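Process discovery usually starts from an event log with at least a case id, an activity, and an ordering. The sketch below builds a directly-follows graph (how often activity B immediately follows activity A within a case), a common starting point for discovery algorithms, and counts the process variants. The log is hypothetical and assumed to be already sorted by timestamp.

```python
from collections import Counter, defaultdict
from typing import List, Tuple

# Hypothetical event log: (case id, activity), sorted by timestamp within each case.
event_log: List[Tuple[str, str]] = [
    ("c1", "Receive"), ("c1", "Check"), ("c1", "Approve"), ("c1", "Pay"),
    ("c2", "Receive"), ("c2", "Check"), ("c2", "Reject"),
    ("c3", "Receive"), ("c3", "Check"), ("c3", "Approve"), ("c3", "Pay"),
]


def traces(log: List[Tuple[str, str]]) -> List[List[str]]:
    # Group events per case, keeping their order: one trace per case.
    by_case = defaultdict(list)
    for case_id, activity in log:
        by_case[case_id].append(activity)
    return list(by_case.values())


def directly_follows(log: List[Tuple[str, str]]) -> Counter:
    # Count each (A, B) pair where B comes immediately after A in the same case.
    dfg: Counter = Counter()
    for trace in traces(log):
        dfg.update(zip(trace, trace[1:]))
    return dfg


dfg = directly_follows(event_log)
for (a, b), n in sorted(dfg.items()):
    print(f"{a} -> {b}: {n}")

variants = Counter(tuple(t) for t in traces(event_log))
print(variants.most_common(1))  # the most frequent path through the process
```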


Reactive Computing |

The flow of (incoming) data, rather than an application’s (or CPU’s) regular control flow, governs its architecture and runtime behaviour. This is a new paradigm, sometimes even driven by new hardware, that departs from the traditional, control-flow-driven way of processing data. Also known as Dataflow Architecture, and related to EDA.
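A minimal push-based sketch in the spirit of reactive libraries such as ReactiveX; the `Stream` class is hypothetical and not the API of any library. The pipeline is wired once, after which each arriving value triggers the computation, instead of a main loop deciding when to read and process data.

```python
from typing import Callable, List


class Stream:
    """Hypothetical push-based stream: values flow to subscribers as soon as they arrive."""

    def __init__(self) -> None:
        self._subscribers: List[Callable[[float], None]] = []

    def subscribe(self, fn: Callable[[float], None]) -> None:
        self._subscribers.append(fn)

    def emit(self, value: float) -> None:
        for fn in self._subscribers:
            fn(value)

    def map(self, fn: Callable[[float], float]) -> "Stream":
        out = Stream()
        self.subscribe(lambda v: out.emit(fn(v)))
        return out

    def filter(self, pred: Callable[[float], bool]) -> "Stream":
        out = Stream()
        self.subscribe(lambda v: out.emit(v) if pred(v) else None)
        return out


# Wire the dataflow graph once: Celsius readings -> Fahrenheit -> alerts above 100 °F.
celsius = Stream()
alerts: List[float] = []
celsius.map(lambda c: c * 9 / 5 + 32).filter(lambda f: f > 100).subscribe(alerts.append)

# Arriving sensor readings are what drive all further computation.
for reading in [20.0, 39.5, 41.0]:
    celsius.emit(reading)
print(alerts)
```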


Resilience Engineering |

Resilience (or robustness) is the ability of a system to cope with errors during execution and with erroneous input. Example techniques include fuzz testing, mutation testing, fault injection, and chaos engineering. Resilience engineering is often part of DevOps.
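A bare-bones random fuzzer, much simpler than coverage-guided tools such as AFL or libFuzzer. The `parse_amount` function under test and the `fuzz` helper are hypothetical; the robustness criterion assumed here is that erroneous input must be rejected with a `ValueError` and must never cause any other exception.

```python
import random
import re
import string
from typing import Callable, List, Tuple

AMOUNT = re.compile(r"([0-9]+)(?:\.([0-9]{1,2}))?")


def parse_amount(text: str) -> int:
    """Code under test: parse '12.34' into 1234 cents; reject anything else with ValueError."""
    m = AMOUNT.fullmatch(text.strip())
    if m is None:
        raise ValueError(f"not an amount: {text!r}")
    whole, frac = m.group(1), m.group(2) or ""
    return int(whole) * 100 + int(frac.ljust(2, "0"))


def fuzz(target: Callable[[str], object], runs: int = 2_000,
         seed: int = 0) -> List[Tuple[str, Exception]]:
    """Feed random strings to `target`; any exception other than ValueError is a robustness bug."""
    rng = random.Random(seed)
    alphabet = string.digits + ".-, \t\x00é"
    crashes = []
    for _ in range(runs):
        data = "".join(rng.choice(alphabet) for _ in range(rng.randint(0, 8)))
        try:
            target(data)
        except ValueError:
            pass  # graceful rejection of erroneous input is the expected behaviour
        except Exception as exc:  # anything else means the code did not cope
            crashes.append((data, exc))
    return crashes


print(f"{len(fuzz(parse_amount))} crashing inputs found")
```

Pointing the same `fuzz` helper at a careless function such as `lambda s: 100 // len(s)` quickly surfaces the empty-string `ZeroDivisionError`.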


Augmented Data Management |

With the addition of AI and machine learning, the manual tasks in data management will be reduced considerably. Various product categories will be ‘augmented’, such as data quality, metadata management, master data management, data integration, and database management systems.


Collaborative MDM |

Collaborative, organized management of anomalies originating from authentic sources, carried out by the official users of those sources.


Crypto-agility |

Crypto-agility allows an information security system to switch to alternative cryptographic primitives and algorithms without making significant changes to the system’s infrastructure. Crypto-agility facilitates system upgrades and evolution. |
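A sketch of one crypto-agility pattern for hashing, using Python’s standard `hashlib.new`, which selects an algorithm by name. The `algorithm$digest` storage format, the allow-list, and the `fingerprint`/`verify` helpers are assumptions for illustration. Because the algorithm name is stored with every value, switching `CURRENT_ALGORITHM` changes what new values use while old values remain verifiable; the same idea applies to ciphers and signature schemes.

```python
import hashlib
import hmac
from typing import Optional

ALLOWED_ALGORITHMS = {"sha256", "sha3_256", "blake2b"}
CURRENT_ALGORITHM = "sha256"  # the single setting to change when migrating


def fingerprint(data: bytes, algorithm: Optional[str] = None) -> str:
    # Read the configured algorithm at call time, so a switch takes effect immediately.
    algorithm = algorithm or CURRENT_ALGORITHM
    if algorithm not in ALLOWED_ALGORITHMS:
        raise ValueError(f"algorithm {algorithm!r} is not allowed")
    # Store the algorithm name with the digest so old values stay verifiable.
    return f"{algorithm}${hashlib.new(algorithm, data).hexdigest()}"


def verify(data: bytes, stored: str) -> bool:
    algorithm, _, _ = stored.partition("$")
    return hmac.compare_digest(fingerprint(data, algorithm), stored)


old = fingerprint(b"contract v1")
CURRENT_ALGORITHM = "sha3_256"  # algorithm switch: no other code changes
new = fingerprint(b"contract v1")
print(old.split("$")[0], new.split("$")[0])
print(verify(b"contract v1", old), verify(b"contract v1", new))
```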


Data Virtualization |

Methods and tools that give users access to databases with heterogeneous data models through a single virtual, logical view.


Edge Computing |

Information processing, content collection, and delivery are placed closer to the endpoints to address the high WAN costs and unacceptable latency of the cloud. Edge computing is also becoming more relevant in the context of AI solutions.


Mobile Development |

A set of techniques, tools, and platforms for developing web-based and platform-specific mobile applications.


Visual Analytics |

A methodology and enabling tools for combining data visualization with analytics, allowing data to be explored, analyzed, and forecast rapidly. This supports modeling in advanced analytics and the creation of modern, interactive, self-service BI applications.


Evolutionary Algorithms |

Within AI, a family of optimization algorithms inspired by biological evolution (selection, crossover, mutation), which ‘evolve’ a population of candidate solutions towards a better solution to a problem.
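A compact genetic algorithm, one member of this family, applied to the toy OneMax problem (maximize the number of 1-bits). Tournament selection, one-point crossover, bit-flip mutation, and elitism are standard choices; the parameter values are arbitrary assumptions, not tuned settings.

```python
import random
from typing import List


def fitness(bits: List[int]) -> int:
    # OneMax toy problem: the optimum is a string of all ones.
    return sum(bits)


def evolve(length: int = 20, pop_size: int = 30, generations: int = 60,
           mutation_rate: float = 0.02, seed: int = 1) -> List[int]:
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]

    def tournament(pop: List[List[int]]) -> List[int]:
        # Selection: the fitter of two randomly drawn individuals becomes a parent.
        a, b = rng.sample(pop, 2)
        return a if fitness(a) >= fitness(b) else b

    for _ in range(generations):
        children = [max(population, key=fitness)]  # elitism: keep the best so far
        while len(children) < pop_size:
            mum, dad = tournament(population), tournament(population)
            cut = rng.randrange(1, length)  # one-point crossover
            child = mum[:cut] + dad[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        population = children
    return max(population, key=fitness)


best = evolve()
print(fitness(best), best)
```

Elitism guarantees that the best fitness never decreases from one generation to the next, which makes the search easier to reason about.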


Formal software verification

Formal verification is the act of proving or disproving the correctness of intended algorithms with respect to a formal specification, using formal methods of mathematics. Formal verification may be useful for critical parts of software, such as cryptographic implementations.
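A toy example of a machine-checked proof in Lean 4, assuming a recent toolchain in which the `omega` tactic is built in. The function `maxNat` is hypothetical; the two theorems state a property of it for all natural numbers, and Lean only accepts the file if the proofs are correct. Verifying real cryptographic code follows the same principle at a much larger scale.

```lean
-- A tiny function whose behaviour we want to guarantee for every input.
def maxNat (a b : Nat) : Nat := if a ≤ b then b else a

-- Specification, part 1: the result is never smaller than the first argument.
theorem le_maxNat_left (a b : Nat) : a ≤ maxNat a b := by
  unfold maxNat
  split <;> omega

-- Specification, part 2: the result is never smaller than the second argument.
theorem le_maxNat_right (a b : Nat) : b ≤ maxNat a b := by
  unfold maxNat
  split <;> omega
```

Unlike a test suite, which checks a finite number of inputs, these proofs cover every pair of natural numbers.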